

Media Literacy Going Digital

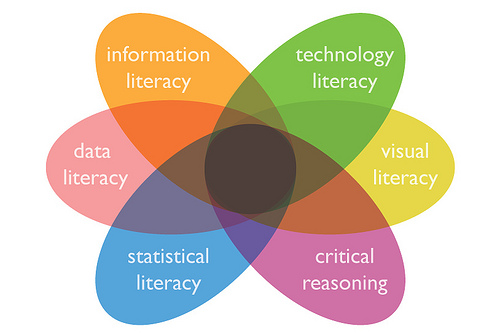

The media is increasingly going digital, providing citizens with opportunities to access a variety of sources, to express themselves and to share information. At the same time, people often lack the necessary skills to...

By Lisa Ginsborg

As is well known, the Internet poses a number of unprecedented challenges related to the limitation of freedom of expression (La Rue, 2014). The scale of the content which may be legitimately restricted, ranging from child pornography to hate speech, raises a number of complex new problems, including the question of establishing jurisdiction for illegal behaviour in the cross-border Internet world, and the role of internet intermediaries with respect to content regulation (Benedek and Kettemann, 2014). The public policy debate concerning self-regulation of the internet remains deeply ambivalent across different regions of the world (Tambini et al., 2014).

In Europe, areas traditionally covered by the State are being handed over to private actors, with limited fundamental rights safeguards, while the ambiguities characterising EU regulation create a chilling effect on freedom of expression (MacKinnon et al., 2014). EU law clearly prevents Member States from imposing on providers of intermediary services either a general obligation to monitor the information which they transmit or store, or a general obligation actively to seek facts or circumstances indicating illegal activity.[1] The CJEU has consistently held that such an obligation would undermine European fundamental rights standards, in particular with respect to Article 8 and Article 11 of the Charter of Fundamental Rights.[2] However, some of the most central problems with respect to internet content regulation remain unanswered. These include how to avoid intermediary liability while ensuring human rights ‘respect’ by key players (‘macro-gatekeepers’?) which are increasingly central to the democratic landscape (Laidlaw, 2010), and how to resolve the tension between the expediency and advantages offered by industry self-regulation and the need to ensure freedom of expression (Tambini et al., 2014). Intermediaries continue to operate in a very complex legal landscape, with little transparency and accountability and huge risks for freedom of expression and information, especially with respect to content regulation (Jørgensen et al.). The need for a pragmatic approach to the large volume of take-down requests, each requiring a complex legal assessment to ensure that limitations are lawful under international human rights law, continues to baffle policy-makers and scholars alike.

Getting a full picture of current national standards and practices with respect to freedom of expression online across EU Member States presents huge challenges in itself. In particular, general and specific legislation regulating the blocking, filtering and removal of internet content raises considerable questions from a human rights perspective.[4] On the one hand, there appears to be general international consensus on the requirements for a legal framework authorizing restrictive measures. It should be foreseeable, accessible, precise and clear with regard to the application of restrictive measures, and afford sufficient protection against arbitrary interferences by public authorities. It should also provide for a determination of the scope of restrictive measures by a judicial authority or an independent body, and for an effective judicial review of the proportionality of restrictive measures, including an assessment of whether the restrictive measure is the least far-reaching measure to achieve the legitimate aim.[5] On the other hand, the question of the procedural guarantees regulating blocking, filtering and take-down of internet content remains hotly debated, for instance whether the administrative blocking of websites in cases of terrorist incitement is appropriate under international human rights law.[6] Few comprehensive comparative studies have been carried out to date. They include Freedom House's Freedom on the Net (which covers only a few EU Member States)[7] and the 2015 Council of Europe Comparative study on blocking, filtering and take-down of illegal internet content, commissioned from the Swiss Institute of Comparative Law.[8]

The 2017 Media Pluralism Monitor (MPM2017) aims to help fill this gap by also attempting to monitor the online dimension of freedom of expression. Yet the complexities of measuring these aspects were also reflected in its results. Three questions attempt to measure freedom of expression online in each EU Member State. The first asks whether restrictions upon freedom of expression online are clearly defined in law in accordance with international and regional human rights standards, and whether ‘the restrictions to freedom of expression online are proportionate to the legitimate aim pursued’. The subsequent two questions, on practice, ask whether the state and ISPs respectively ‘generally refrain from filtering and/or monitoring and/or blocking and/or removing online content in an arbitrary way’. Overall, the results of the MPM2017 do not point in the direction of an increased risk for freedom of expression online when compared to the offline dimension. With a few notable exceptions, most countries appear to score the same along the two dimensions, either because they lack specific legislation applying to the online aspects of freedom of expression, or more generally because the two dimensions appear to be moving hand in hand. However, the information provided by country teams for the MPM2017 also points to the complexities of measuring such laws and practices, especially when laws on filtering, blocking and removing online content are fragmented across different sectors of law and combined with widespread practices of self-regulation by the private sector. While the procedures for filtering, blocking and removing online content generally vary depending on the nature of the material in question, different legal systems present a variety of approaches to regulating illegal online content, also under the influence of relevant EU sector-specific legislation.

However, two worrying legislative trends emerge from the MPM2017 and should be highlighted in this context. First, there appears to be a move towards new ‘surveillance laws’ being adopted in EU countries (e.g. France, Germany, Netherlands, Poland, United Kingdom), which were captured under the MPM2017 question of whether “the state generally refrains from monitoring online content in an arbitrary way”, but also by the question asking whether there are “threats to the digital safety of journalists”. As is well known, mass surveillance laws pose particular risks to freedom of expression, including in relation to the protection of journalists' sources, as well as to privacy rights. Second, the tendency of states increasingly to call upon internet intermediaries to regulate internet content reached its peak with the contested German Network Enforcement Act (NetzDG), which was passed in June 2017. The law requires ‘Social Networks’ inter alia to promptly remove content that violates provisions of the German Criminal Code, and imposes severe administrative fines when companies fail to do so. The law has been widely criticized by national and international organizations for its freedom of expression implications.[9]

[1] Directive 2000/31/EC of the European Parliament and of the Council of 8 June 2000 on certain legal aspects of information society services, in particular electronic commerce, in the Internal Market (‘Directive on electronic commerce’), Article 15.

[2] Scarlet v Sabam 2011, Sabam v Netlog 2012.

[3] Proposal for a Directive amending the Audiovisual Media Services Directive; Proposal for a Directive of the European Parliament and of the Council on copyright in the Digital Single Market.

[4] ‘Report of the Special Rapporteur on human rights and counter-terrorism’, UN Doc. A/HRC/31/65, paras. 38–40.

[5] See, for instance, ‘State of democracy, human rights and the rule of law in Europe’ (2015), Report by the Secretary General of the Council of Europe, https://edoc.coe.int/en/index.php?controller=get-file&freeid=6455, accessed 17 October 2017.

[6] E.g., see OHCHR (2016), ‘UN rights experts urge France to protect fundamental freedoms while countering terrorism’, 19 January, https://www.ohchr.org/EN/NewsEvents/Pages/DisplayNews.aspx?NewsID=16966&LangID=E#sthash.bCaO9ncL.dpuf, accessed 17 October 2017.

[7] https://freedomhouse.org/report-types/freedom-net

[8] See https://edoc.coe.int/en/internet/7289-pdf-comparative-study-on-blocking-filtering-and-take-down-of-illegal-internet-content-.html

[9] E.g., see https://www.article19.org/wp-content/uploads/2017/09/170901-Legal-Analysis-German-NetzDG-Act.pdf and https://www.hrw.org/news/2018/02/14/germany-flawed-social-media-law.