The power remains with platforms?

Written by Iva Nenadić, Research Fellow, Centre for Media Pluralism and Media Freedom

Over the past two decades, the media and information landscape has undergone a significant transformation, driven largely by technology companies that increasingly shape the information environment and civic discourse. They influence this landscape through the design and business models of their services, as well as through their content moderation practices. Due to their vast global reach, a small number of platforms provided by tech companies like Meta, TikTok, and X (Twitter) have become central places for citizens to engage with news and political discussions. This shift has affected the economic sustainability of the media, their democratic role, and how they are understood conceptually. The relationship between media and digital platforms is a case of power imbalance that may harm democracy, or may already have harmed it. Platforms exert disproportionate influence over the reach and visibility of journalistic content and profit from its distribution, while often neither compensating media fairly nor recognising the unique role that quality journalism plays in society.

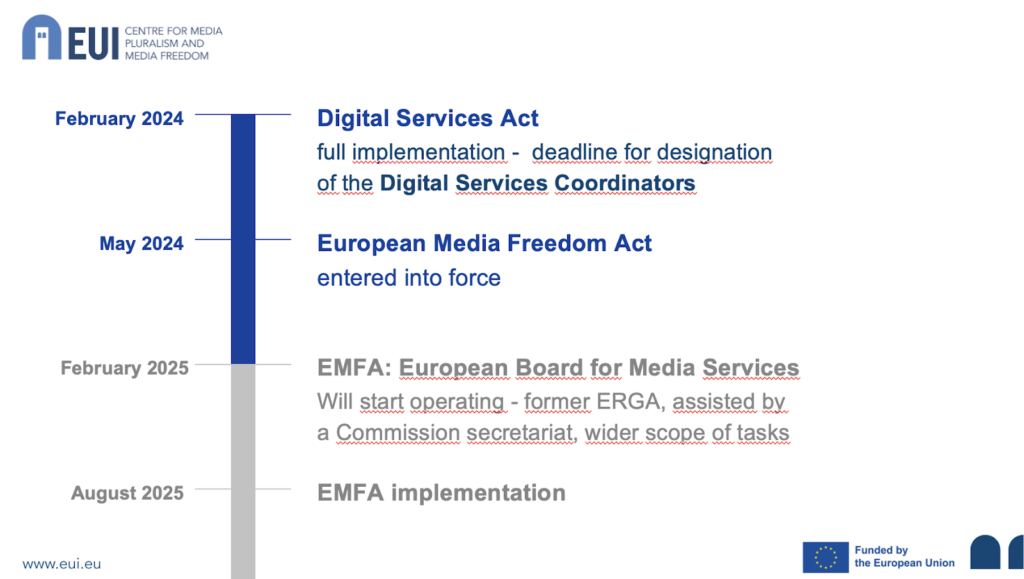

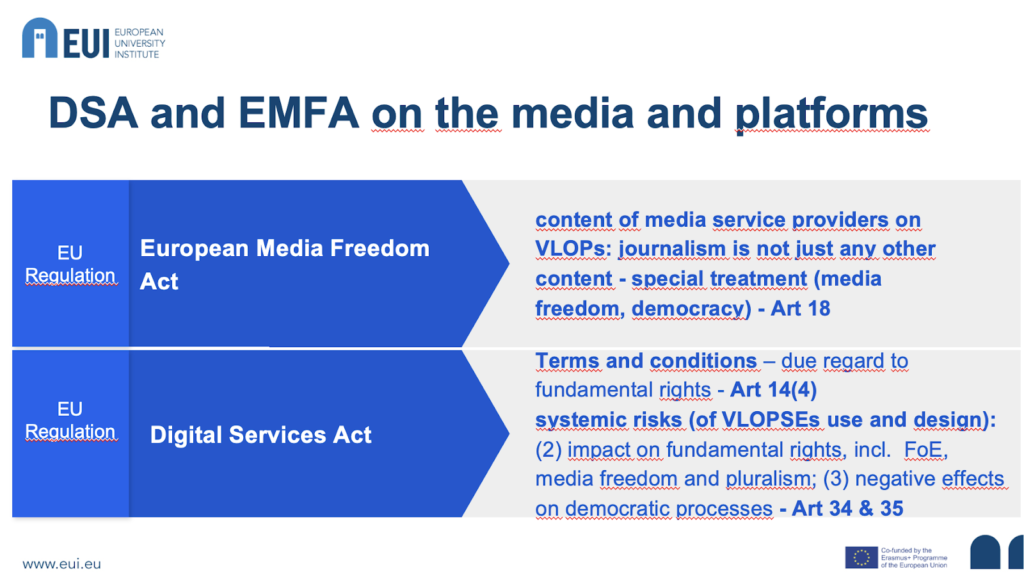

The need to safeguard media freedom and pluralism in the context of very large online platforms (VLOPs) is addressed by both the European Media Freedom Act (EMFA) and the Digital Services Act (DSA). The DSA has been fully applicable since February 2024, while the EMFA will reach the implementation stage in the summer of 2025. As national and European systems prepare for the EMFA's rollout, this blog post outlines the interplay between these two EU regulations regarding media freedom and pluralism. Both laws establish EU-level bodies through which regulators cooperate and advise the Commission on relevant matters: the European Board for Digital Services under the DSA and the European Board for Media Services under the EMFA. Given the complexity of the EMFA and the DSA and the interplay between them, particularly regarding fundamental rights and the understanding of systemic risks deriving from platforms' design and use, competent authorities will play a crucial role in enforcement, as elaborated in our earlier blog post.

Already in its recitals, the DSA emphasises the need for VLOPs to pay due regard to freedom of expression and of information, including media freedom and pluralism, when applying the measures required by the DSA. More specifically, Article 14, on the transparency, accessibility, and application of terms and conditions, requires in paragraph 4 that providers act with "due regard to the rights and legitimate interests of all parties involved, including the fundamental rights of the recipients of the service, such as the freedom of expression, freedom and pluralism of the media, and other fundamental rights and freedoms as enshrined in the Charter."

One of the key and novel aspects introduced by the DSA is systemic risk assessment and mitigation (Articles 34 and 35). These provisions require very large online platforms and very large online search engines (together: VLOPSEs) to conduct regular assessments of actual or foreseeable systemic risks arising from the design, operation, or use of their services. Regular in this context means at least once a year, and in any event before deploying functionalities likely to have a critical impact on the risks identified. VLOPSEs are also required to mitigate such risks (Art. 35). The DSA outlines four broad categories of systemic risks: (1) dissemination of illegal content; (2) negative effects on fundamental rights, such as freedom of expression and media freedom; (3) negative effects on civic discourse, electoral processes, and public security; and (4) negative effects related to gender-based violence, public health, and minors.

According to the DSA, mitigation measures should be reasonable, proportionate, and effective, while also taking into account their impact on fundamental rights. However, systemic risk assessment and mitigation is a new area of action both for platforms and for the European Commission, which oversees and enforces the DSA. The responsibility for operationalising the broad and complex risk categories listed above, and for determining the standards for identifying systemic risks, largely falls on the platforms, at least in the early stages of the DSA's implementation. Since platforms are for-profit entities that have previously failed to safeguard fundamental rights and media freedom on their services, there is significant concern about entrusting them with such critical tasks. This concern is heightened because the definition and framing of systemic risks under the DSA determine the application of Article 18 of the EMFA, which provides for special treatment of media content in content moderation by VLOPs.

Several oversight mechanisms are contained in the DSA, including annual independent audits (Article 37). The European Commission has adopted a Delegated Regulation laying down additional rules on the performance of audits of VLOPSEs, including methodologies for auditing compliance with the systemic risk assessment and mitigation obligations (Articles 13 and 14 of the Delegated Regulation). However, in the first year of the DSA's implementation, both the audit methodology for specific systemic risks and the VLOPSEs' own assessments of those risks remain unclear. VLOPSEs must transmit their audit reports, together with reports on the results of their risk assessments and the mitigation measures implemented, to the European Commission and to the Digital Services Coordinator of establishment (the DSA regulatory authority designated in the country where a platform has its main EU establishment). These reports must also be made public (Article 42(4)), but platforms may remove information they consider confidential or security-sensitive from the public versions (Article 42(5)), so the public record may remain incomplete. Further insights related to systemic risks may be available from the transparency reports that VLOPSEs are obliged to publish every six months (Article 42). The DSA explicitly requires VLOPSEs to provide researchers with access to the data necessary to monitor and assess compliance with the DSA, particularly in relation to systemic risk assessment and mitigation (Article 40). This marks significant progress in addressing the information asymmetry between platforms and other stakeholders, and it offers great potential for a deeper understanding of the phenomena shaping today's information spaces. However, access to this data is framed by how systemic risks are defined and operationalised, and by who is responsible for doing so.

As already noted, the definition and framing of systemic risks under the DSA directly influence the application of Article 18 of the EMFA. This provision introduces the principle that editorially independent media service providers, and journalistic content that abides by professional and ethical standards, deserve special attention in content moderation by large platforms, which operate without editorial responsibility and with very limited liability.

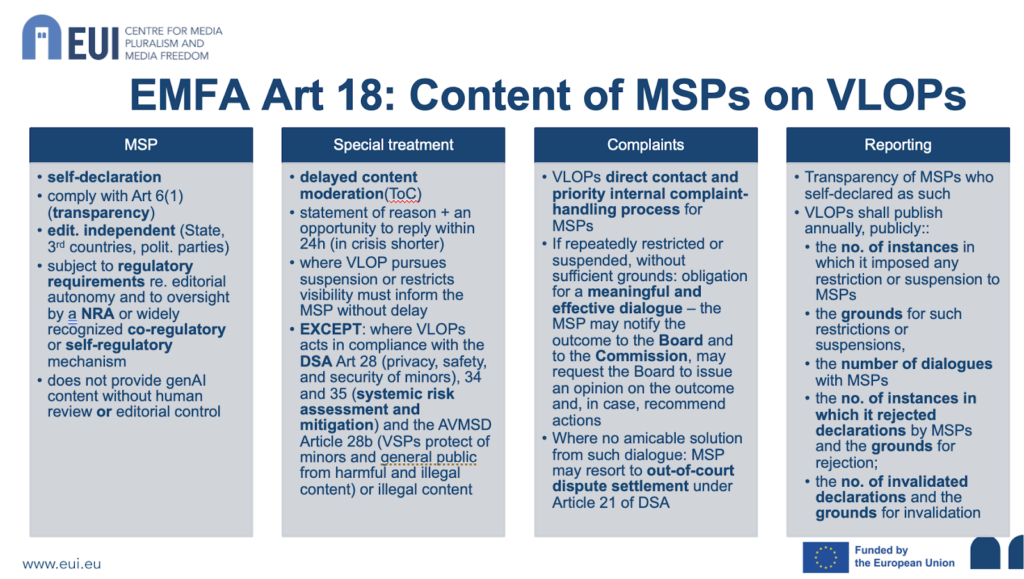

Article 18 of the EMFA requires VLOPs to provide a functionality allowing media service providers (MSPs) to self-declare as such, provided they comply with the transparency requirements of Article 6 and are editorially independent. For the first time, this pioneering EU regulation on media freedom explicitly recognises self-regulation as being as valid as oversight by regulatory authorities in confirming whether an MSP adheres to principles of editorial responsibility and widely recognised editorial standards. Under Article 18, before a VLOP suspends or restricts the visibility of content from a self-declared media service provider, it must send the provider a statement of reasons explaining the decision and give it 24 hours to respond. If the platform then proceeds with the action, it must promptly inform the media service provider of its final decision. The provision requires transparency from the MSPs that benefit from this special treatment, as well as from VLOPs regarding their moderation of media content and their implementation of the rule.

This represents a significant step forward, particularly for smaller media outlets and those in smaller EU countries, which platforms often overlooked and which lacked direct communication channels with them. The new requirement for VLOPs to provide contact details should enable media outlets to communicate quickly with platforms when they believe their content has been unjustifiably removed or its visibility reduced, affecting their advertising revenue and already fragile business models. However, this procedure, which delays content moderation decisions affecting media, applies only when VLOPs act on the basis of their Terms and Conditions. It does not apply when platforms act within the scope of systemic risk mitigation, or in relation to the protection of minors, privacy, and broader safety concerns (as outlined in Article 28 of the DSA and Article 28b of the Audiovisual Media Services Directive).

The interplay between the European Media Freedom Act and the Digital Services Act represents a significant evolution in how very large online platforms are expected to safeguard media freedom and handle media content in Europe. However, the road from normative requirements to practical implementation is not always straightforward. The enforcement of Article 18 of the EMFA could be undermined by malicious actors, including state-controlled or propagandistic outlets, misusing the self-declaration mechanism to spread disinformation and propaganda more effectively. Additionally, how platforms apply this special treatment for media in content moderation is framed by the interpretation and operationalisation of systemic risks under the DSA.

The current lack of clear methodologies, benchmarks, and approaches for assessing and mitigating systemic risks while protecting fundamental rights presents a risk in itself. Yet it also offers an opportunity to deepen understanding of the challenges in balancing democratic information spaces with online safety. If the oversight mechanisms, data access, transparency, structured dialogues, and consultations with stakeholders and experts provided under both the DSA and the EMFA are well coordinated and used, they can help address these challenges effectively. To that end, investing in research and strengthening civil society are essential.

This work in progress was presented at the "European conference: Self-regulation and Regulation in the Media Sector", organised by the European Federation of Journalists on October 14, 2024, in Brussels. A more comprehensive analysis, co-authored with Elda Brogi, is forthcoming as a chapter in the Cambridge Handbook of Media Law and Practice in Europe, edited by Kristina Irion, Tarlach McGonagle, and Martin Senftleben.